AI: Contemporary uses and questions for Audiovisual Entertainment.

This short text discusses contemporary applications for AI (artificial intelligence) machine learning agents as well as AI agent behaviour in the production, distribution, and consumption of audiovisual media. In this context, it aims to raise questions about the implications of AI based task completion.

Note: I wrote this text for a course in Film Theory at College of the Atlantic taught by Professor Colin Capers in Spring 2020. A lot has changed in the field of AI since then, and although there now exist many new and more powerful approaches to using AI in Audiovisual Entertainment and New Media, these recent developments are not reflected in this short survey-essay.

Introduction

In 2012, Google Brain researchers at Google’s semi-secret research laboratory, Google X successfully trained an artificial neural network to recognise cat images from a vast dataset of YouTube videos (John Markoff, ‘How Many Computers to Identify a Cat? 16,000’, The New York Times, 25 June 2012, sec. Technology, link 1). At the time, this milestone in machine learning garnered wide mediatic coverage, notably from the New York Times and The Verge (Ibid; Liat Clark, ‘Google’s Artificial Brain Learns to Find Cat Videos’, Wired, 26 June 2012, link 2). Today, eight years later, image recognition technology is cheaper, more versatile and widely available than ever, in fact, it is now a standard feature on most modern mobile phones, digital cameras, and laptops at no specified cost to the end-user.

Image recognition is just one of the many implementations of AI systems in modern consumer electronics. We interact with AI constantly through electronic devices, knowingly and unknowingly as we go about our day-to-day lives. AIs process the images on our smartphone cameras (Sam Byford, ‘How AI Is Changing Photography’, The Verge, 31 January 2019, link 3), model our tastes and consumer behaviours to better market products to us online (Charles Taylor, ‘Can Artificial Intelligence Eliminate Consumer Privacy Concerns For Digital Advertisers?’, Forbes, accessed 4 June 2020, link 4), determine what media to recommend to us (Joss Fong, ‘How Smart Is Today’s Artificial Intelligence?’, Vox, 19 December 2017, link 5), and fulfil many other tasks that simultaneously serve us and shape our behaviours.

The ever-increasing ubiquity of AI agents in our lives echoes the huge advancements in AI research and electronic hardware in the last decade. Most relevant to this exploration are advancements and discoveries in the field of machine learning that have led to significant changes in the way we make, consume, and think about audiovisual media. AI involvement in the production, distribution, and consumption (referred to from here on as PDC) of audiovisual media is growing as businesses, researchers, and enthusiasts train neural networks and provide them with appropriate interfaces to tackle PDC tasks. Provided it has access to the right interface, there exists an AI agent capable of carrying out every audiovisual media PDC task.

Existing AI implementations for audiovisual media PDC

While AI agents can be trained to perform any audiovisual media PDC task, the properties of the agent’s work output might differ widely from the output of a similarly instructed human worker. Generally, AI agent capabilities are measured by comparing the agent’s performance to a set of human-designed criteria. Against these criteria, AI’s fare very differently depending on their design and task. While some do very well, even occasionally surpassing human abilities altogether, others produce poor results. For the purposes of this text, AI agent performance is secondary to AI agent behaviour.

The following three sections of this text describe some notable examples of AI implementations in the production, distribution, and consumption of audiovisual content, supplemented with short descriptions and evaluations of their conduct and work output.

Audiovisual media production

1) Benjamin: screenwriting, video editing and visual effects

LSTM (long short-term memory), also known as Benjamin is an artificial recurrent neural network (Annalee Newitz, ‘Movie Written by Algorithm Turns out to Be Hilarious and Intense’, Ars Technica, 6 September 2016, link 6) partially credited for producing three short films: Sunspring (2016, starring Thomas Middleditch), It’s no game (2017, starring David Hasselhoff), and Zone Out (2018, starring Thomas Middleditch) (‘Films by Benjamin the A.I.’, Therefore Films, accessed 5 June 2020, link 7). Benjamin is credited for generating the Sunspring screenplay (Newitz, ‘Movie Written by Algorithm Turns out to Be Hilarious and Intense’), co-authoring the screenplay of It’s no game (Annalee Newitz, ‘An AI Wrote All of David Hasselhoff’s Lines in This Bizarre Short Film’, Ars Technica, 25 April 2017, link 8), and for writing and editing Zone Out (‘AI Made a Movie With a “Silicon Valley” Star—and the Results Are Horrifyingly Encouraging’, Wired, accessed 5 June 2020, link 9). Benjamin’s films are freely available for viewing online at thereforefilms.com.

For each film, the AI agent was trained on different datasets:

- To write Sunspring, writer, artist and data scientist Ross Goodwin fed it dozens (a small number for an AI dataset) of sci-fi movie scripts, resulting in absurd and ominous sci-fi-esque dialogues (Newitz, ‘Movie Written by Algorithm Turns out to Be Hilarious and Intense’.)

- For It’s no game, Goodwin had Benjamin study David Hasselhoff shows to produce Hasselhoff’s lines. While the result is nonsensical, Hasselhoff seemed genuinely moved by the script. In an article by Annalee Newitz on Ars Technica, Hasselhoff reflects: “This AI really had a handle on what's going on in my life and it was strangely emotional” (Ibid).

- To produce Zone Out, Goodwin trained Benjamin from a pool of films in the public domain (‘AI Made a Movie With a “Silicon Valley” Star—and the Results Are Horrifyingly Encouraging’). The result is a semi-coherent absurdist black and white drama with actors deepfaked over shots from traditionally produced cinematic content (Deepfakes are an AI based video manipulation technique which maps faces from a reference database onto an image sequence).

While Benjamin’s writing and editing often result in absurd dialogue and stories, its work demonstrates clear pattern recognition vis-à-vis oral and visual language in Film.

2) Watson: film trailer video editing

In 2016, 20th Century Fox partnered with IBM to produce the official film trailer for Morgan (2016) using Watson, IBM’s proprietary AI business platform (‘IBM Research Takes Watson to Hollywood with the First “Cognitive Movie Trailer”’, THINK Blog, 31 August 2016, link 10). The resulting trailer is blandly edited and generally unexciting, but it is also difficult to distinguish from many (presumably) human-directed trailers. The film flopped, and critics and audiences alike widely dismissed the film on its release.

3) Stanford & Adobe research: Computational Video Editing for Dialogue-Driven Scenes

Computational Video Editing for Dialogue-Driven Scenes is a research paper co-authored by Mackenzie Leake, Abe Davis, Anh Truong, and Maneesh Agrawala (of Stanford University and Adobe Research). The article was published in July 2017 in the journal ACM Transactions on Graphics. It presents an AI-driven system for efficiently editing video of dialogue-driven scenes. The system employs film editing idioms to instruct the AI agent to edit according to defined parameters with remarkable success. The results, with regards to their respect of editing idioms, could easily be mistaken to have been edited by an experienced human video-editor.

4) GANs: image manipulation and image sequence generation

Generative Adversarial Networks (usually abbreviated to GANs) are machine learning frameworks which employ two AI networks (often the same agent twice) competing to improve an AI’s work output for a given task (Generative Adversarial Networks (GANs) - Computerphile, accessed 5 June 2020, link 11). In this competition, one network acts as a generator, outputting information, while the other acts as a discriminator, evaluating the work submitted by the generator. The discriminator’s evaluation is then fed back into the generator as a score, allowing the generator to adjust its output and improve its work (Ibid).

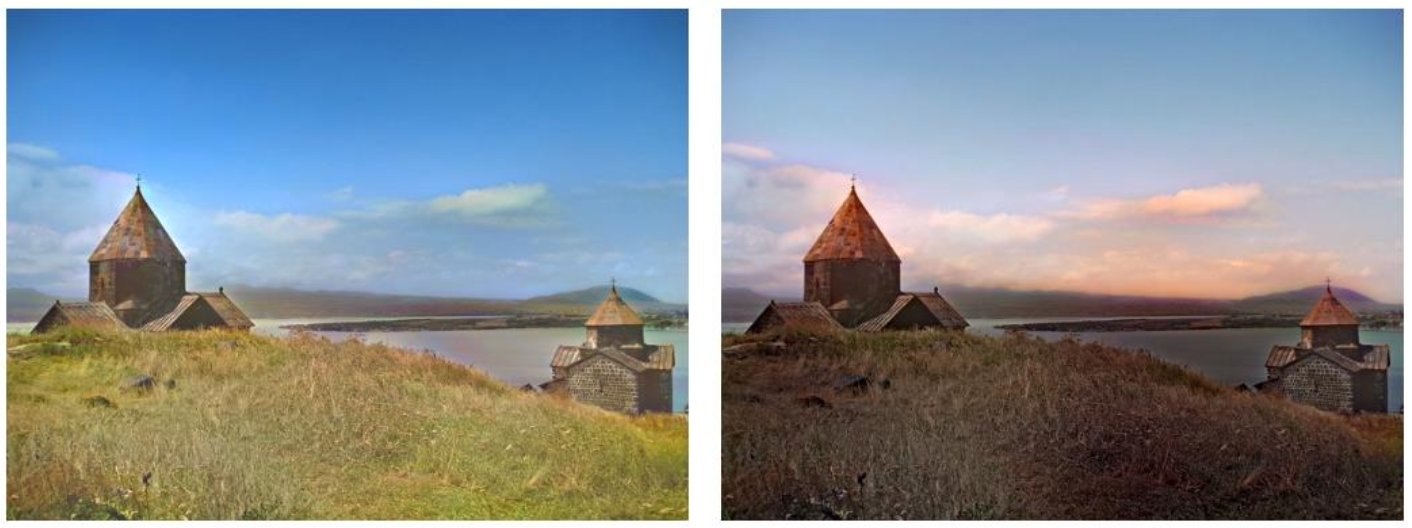

GANs have shown great capacity to manipulate and generate new images and image sequences from described attributes. Manipulating Attributes of Natural Scenes via Hallucination, a research paper published in February 2020 in the journal ACM Transactions on Graphics and co-authored by Levent Karacan, Zeynep Akata, Aykut Erdem, and Erkut Erdem explores the generation and manipulation of nature scenes through a process of neural network image hallucination (Levent Karacan et al., ‘Manipulating Attributes of Natural Scenes via Hallucination’, ACM Transactions on Graphics 39, no. 1 (11 February 2020): 1–17, link 12). The results (example image below) are astonishing.

GAN image manipulation example (Levent Karacan et al., ‘Manipulating Attributes of Natural Scenes via Hallucination’, ACM Transactions on Graphics 39, no. 1 (11 February 2020): 1–17, https://doi.org/10.1145/3368312)

Another paper, Adversarial Video Generation on Complex Datasets, published by Google DeepMind researchers Aidan Clark, Jeff Donahue, and Karen Simonyan in 2019, demonstrates excellent potential for GAN based video generation. In this paper, through the GAN framework, researchers successfully trained an AI agent to produce 256x256 pixel resolution videos of a duration of 48 frames (Aidan Clark, Jeff Donahue, and Karen Simonyan, ‘Adversarial Video Generation on Complex Datasets’, 15 July 2019, link 13). Their results (example image below) are promising.

Tacotron: voice modelling and speech synthesis

In Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions, a computation and language paper published by researchers at Google and the University of California, Berkeley first published in December 2017 researchers describe Tacotron 2: “a neural network architecture for speech synthesis directly from text” (Jonathan Shen et al., ‘Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions’, 16 December 2017, link 14). In a post to the Google AI Blog (ai.googleblog.com) from around the same time, software engineers Jonathan Shen and Ruoming Pang summarise the systems’ ability to generate human-like speech from text using embedded voice models (‘Tacotron 2: Generating Human-like Speech from Text’, Google AI Blog (blog), accessed 5 June 2020, link 15). The results are often incredibly impressive, although the system has difficulty accurately synthesising words where the tonal accent position is ambiguous (Shen et al., ‘Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions’).

MuseNet & FlowMachines: music generation

MuseNet is a deep neural network developed by OpenAI that can generate four-minute-long musical compositions for a selection of ten instruments. By training the system on hundreds of thousands of MIDI files, researchers have taught the network to recognise different patterns of musical styles, harmony, and rhythms (‘MuseNet’, OpenAI, 25 April 2019, link 16). MuseNet can generate music from a reference, from style, or rhythm prompts, or without any input whatsoever (Ibid). Its output could easily be mistaken for human work. MuseNet music samples are available on the OpenAI website, openai.com.

Much like MuseNet, FlowMachines is a music generation neural network. Developed by scientists at Sony CSL Paris and Pierre and Marie Curie University (UPMC), the program garnered much attention in 2016 for composing two music pieces now freely available to listen to on YouTube (Mr Shadow: A Song Composed by Artificial Intelligence, accessed 5 June 2020, link 17). FlowMachines creates music much more complex than MuseNet, but its music generation is more recognisably machine-like than its OpenAI counterpart (example song linked below).

Audiovisual media distribution:

Targeted recommendations and social network advertising

Many online music and video streaming services use machine learning to generate media recommendations for clients algorithmically. Among others, this is the case for Netflix, whose recommendation system analyses viewer data in combination with the characteristics of viewed content (storyline, character makeup, genre, mood, etc.) (Libby Plummer, ‘This Is How Netflix’s Top-Secret Recommendation System Works’, Wired UK, 22 August 2017, link 18). Similarly, most social media platforms farm user personal information to generate elaborate consumer profiles through big data, allowing them to better target users through advertisements (Kalev Leetaru, ‘Social Media Companies Collect So Much Data Even They Can’t Remember All The Ways They Surveil Us’, Forbes, accessed 5 June 2020, link 19).

Audiovisual media consumption

AI agents that produce images and sound learn through datasets of the same audio and visual material. This applies to all AI agent examples described under the Audiovisual media production section of this paper. Artificial neural networks far outpace humans at consuming audiovisual content, but the implications of content reading are different for human and AIs. While AI’s may be able to process content much faster and more efficiently than human beings, they do not currently possess any means to understand the content that they consume. Nonetheless, AI systems often surpass humans at extracting specific information from content.

Watch, Listen, Attend and Spell: AI audiovisual media information extraction

Watch, Listen, Attend and Spell (abbreviated as WLAS), released in 2016 is a machine learning algorithm developed by researches at Google DeepMind to lip-read human beings on video. The program, trained on news footage from the BBC, can read and caption video content containing a visible speaker with 46.8 per cent accuracy, 34.4 per cent higher than human professionals (James Vincent, ‘Google’s AI Can Now Lip Read Better than Humans after Watching Thousands of Hours of TV’, The Verge, 24 November 2016, link 20).

Concluding statement

What is a human task? Historically, what has fallen within the umbrella are tasks that human beings could not delegate to other animals or machines. As AI agents continue to improve, how will our current understanding of what is or is not a human task change? As AI agents develop, the roles they play in our day-to-day lives will continue to expand. Which tasks will AIs assist in, and which will they take over? It is still too early to tell. Despite the impressive abilities of some AI agents, it seems unlikely that generalised AI involvement in audiovisual media PDC is around the corner. Perhaps it is, but then again, probably not.